The future of responsible AI: Bridging the gap with responsible AI audit tools

Responsible AI audit tools are moving from niche compliance checkboxes to strategic infrastructure. Regulation – particularly the EU AI Act – makes explainability and traceability non-negotiable for many systems, especially those the Act classes as high-risk, and organisations that treat audits as an afterthought risk fines and reputational damage. We built some demo software to explore and refine our thinking – here’s a snapshot of what we found.

Why auditability matters

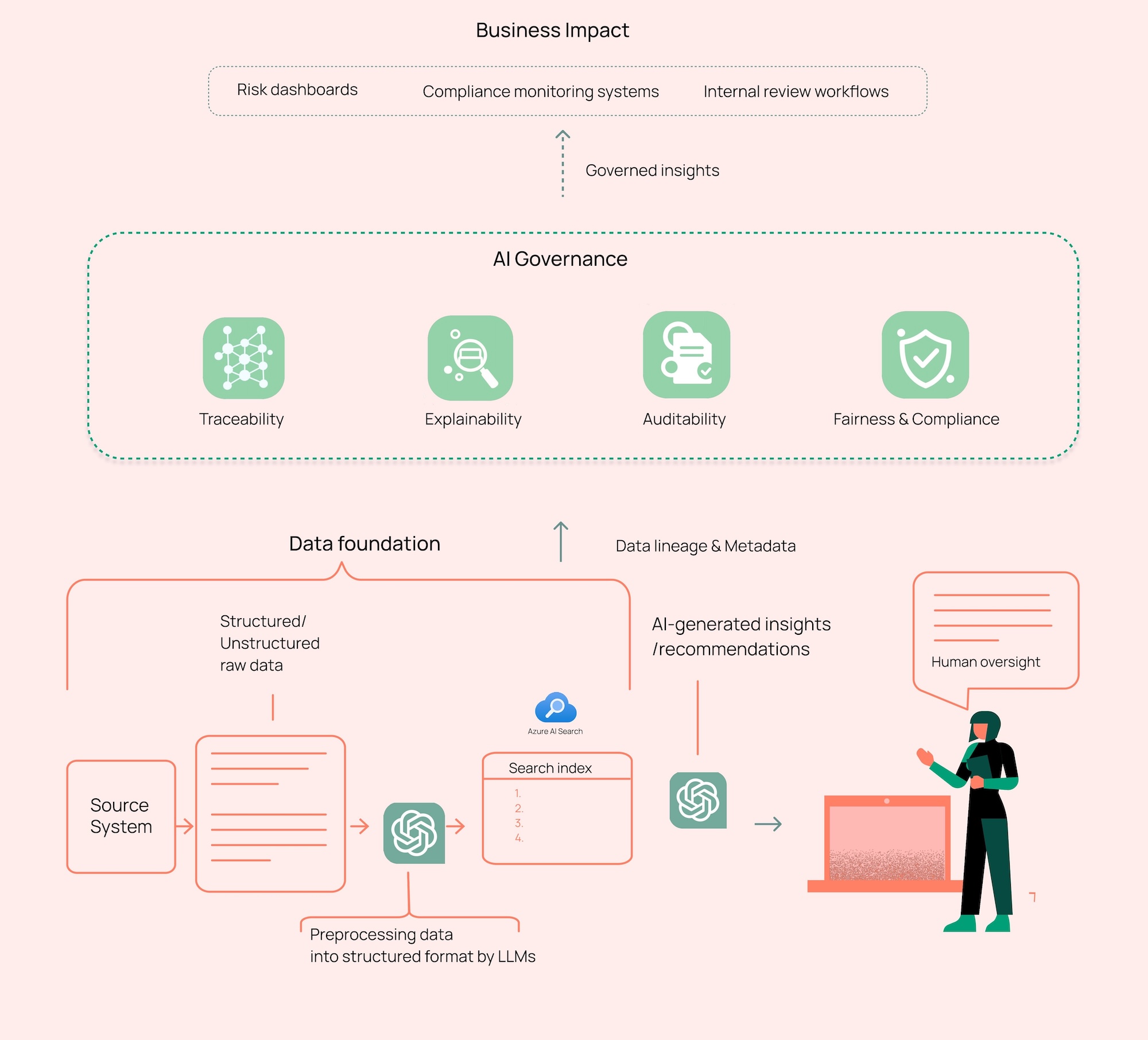

Think of an AI system as a fast-moving supply chain: data flows in, models transform it, and outputs are delivered. If anything goes awry, you need to be able to reconstruct the provenance of an output. That’s traceability. Clear, human-readable reasons for each decision? That’s explainability. Finally, you need a tamper-evident record of both, which is auditability. Together, these three form the backbone of trustworthy AI.
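One common way to make a record tamper-evident is to chain entries by hash, so that editing any earlier entry breaks every later link. The sketch below is a minimal in-memory illustration of that idea, not part of the demo described later; the class and method names (`AuditLog`, `append`, `verify`) and the payload fields are hypothetical.

```python
import hashlib
import json
from dataclasses import dataclass, field

GENESIS = "0" * 64  # placeholder "previous hash" for the first entry


@dataclass
class AuditLog:
    """Hypothetical append-only log: each entry stores the previous entry's hash."""
    entries: list = field(default_factory=list)

    @staticmethod
    def _digest(payload: dict, prev_hash: str) -> str:
        # Canonical JSON (sorted keys) so the same payload always hashes identically.
        body = json.dumps({"payload": payload, "prev": prev_hash}, sort_keys=True)
        return hashlib.sha256(body.encode("utf-8")).hexdigest()

    def append(self, payload: dict) -> str:
        prev_hash = self.entries[-1]["hash"] if self.entries else GENESIS
        entry_hash = self._digest(payload, prev_hash)
        self.entries.append({"payload": payload, "prev": prev_hash, "hash": entry_hash})
        return entry_hash

    def verify(self) -> bool:
        # Recompute every link; an edited payload or a reordered entry breaks the chain.
        prev_hash = GENESIS
        for entry in self.entries:
            if entry["prev"] != prev_hash:
                return False
            if self._digest(entry["payload"], prev_hash) != entry["hash"]:
                return False
            prev_hash = entry["hash"]
        return True


log = AuditLog()
log.append({"decision_id": "d-001", "source": "crm_export_v3", "model": "ranker-v2"})
log.append({"decision_id": "d-001", "reviewer": "analyst-7", "action": "approved"})
print(log.verify())  # True

log.entries[0]["payload"]["model"] = "ranker-v9"  # simulate tampering
print(log.verify())  # False
```

Hash chaining makes tampering detectable rather than impossible: whoever controls the log could rebuild the whole chain, so in practice the latest hash would typically be stored somewhere the writer cannot rewrite.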

Regulation accelerates the need, but business incentives are just as strong. In the financial sector, for example, being able to show how a credit decision was reached can improve customer trust. There is a halo effect, too: simply signalling the ability and confidence to deliver audited decisions can strengthen a brand’s reputation for trustworthiness. That doesn’t mean every decision needs a full audit.

Why pure automation approaches fall short

Many approaches focus on automating tests and flagging bias, and this is useful. Automation without transparency, however, creates a second-order problem: you get alerts without an audit trail you can use to explain them to a regulator or to business leadership. Automation that hides provenance is particularly brittle when legal teams or customers demand explanations. In our experience, the most resilient approaches combine automation with transparency: lightweight, inspectable artefacts such as versioned data snapshots, traceability graphs, and decision-flow visuals that non-technical stakeholders can follow.

These artefacts don’t need to be complex — they just need to make the system’s reasoning and provenance visible enough for humans to trust it.
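As an example of how lightweight such an artefact can be, a versioned data snapshot can be identified purely by its content. The sketch below assumes records are JSON-serialisable dicts; `snapshot_id` is a hypothetical helper, and truncating the SHA-256 digest to 16 hex characters is an arbitrary readability choice.

```python
import hashlib
import json


def snapshot_id(records: list[dict]) -> str:
    """Hypothetical content-addressed ID for a data snapshot.

    Each record is serialised as canonical JSON and the lines are sorted,
    so the ID depends only on the content, not on row order.
    """
    lines = sorted(json.dumps(r, sort_keys=True) for r in records)
    h = hashlib.sha256()
    for line in lines:
        h.update(line.encode("utf-8"))
        h.update(b"\n")  # separator so adjacent records cannot run together
    return h.hexdigest()[:16]


rows = [{"customer": 1, "income": 42000}, {"customer": 2, "income": 38000}]
v1 = snapshot_id(rows)
v2 = snapshot_id(list(reversed(rows)))
changed = snapshot_id([{"customer": 1, "income": 42000}, {"customer": 2, "income": 39000}])
print(v1 == v2)       # True: same content, different order
print(v1 == changed)  # False: one value changed
```

Storing this ID alongside each model output is enough to say later exactly which version of the data an answer was based on.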

Our conceptual demo: xAI pillars for traceability, explainability, and auditing

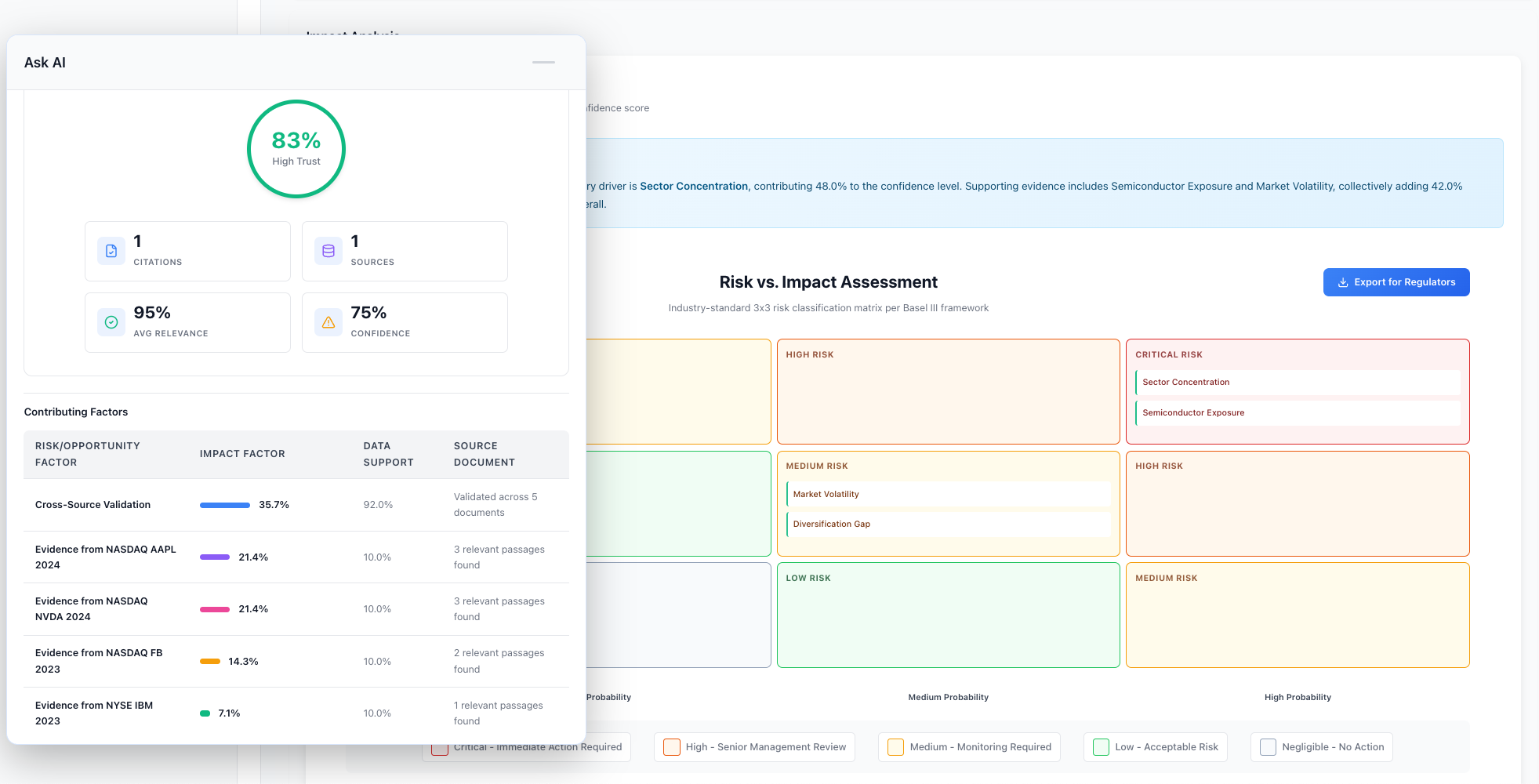

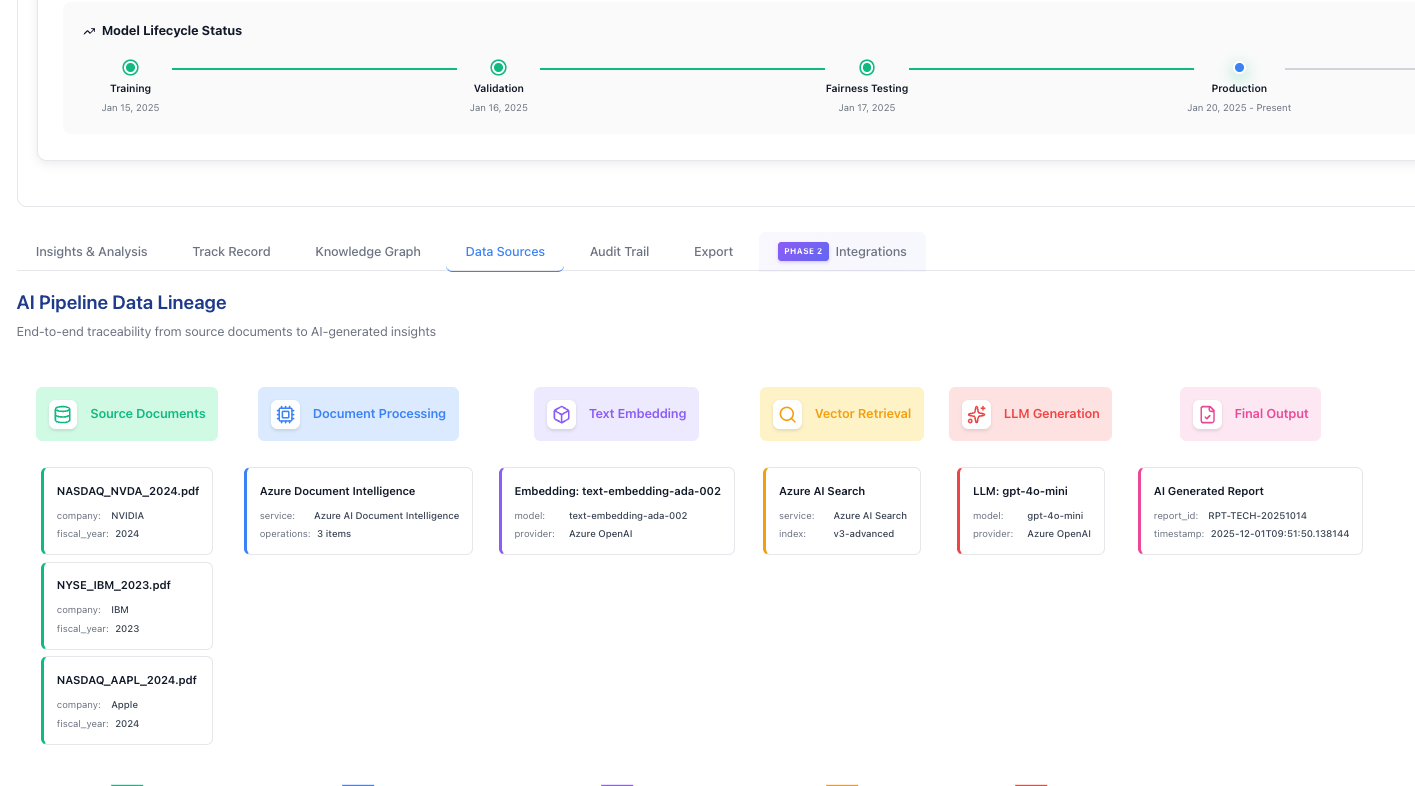

To make this more tangible, we built a conceptual demo on top of Futurice’s own productionised RAG (retrieval-augmented generation) pipeline toolset, BRAGLib, to illustrate what responsible AI governance could look like in practice. Think of it as a thin xAI layer that sits between model endpoints and business applications: it doesn’t replace models, but reveals how they operate. In this prototype, every AI-generated insight can be traced across several dimensions: the source data and signals that informed it, the models and transformation steps that shaped it, and the human reviews that approved or adjusted the final output.
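BRAGLib’s interfaces aren’t described here, so the following is not its API. It is a minimal, hypothetical sketch of the “thin layer” idea, assuming a model endpoint can be wrapped as a Python function that takes a query and a list of source IDs. A decorator (`traced`) records a trace for each call and hands the application a `trace_id` it can link back to; `TRACE_STORE`, `answer` and all field names are invented for illustration.

```python
import functools
import time
import uuid
from typing import Callable

TRACE_STORE: list[dict] = []  # stand-in for a real trace backend (assumption)


def traced(model_name: str, model_version: str) -> Callable:
    """Hypothetical decorator: records a trace for every call to a model endpoint."""
    def decorator(fn: Callable[..., dict]) -> Callable[..., dict]:
        @functools.wraps(fn)
        def wrapper(query: str, sources: list[str]) -> dict:
            trace_id = str(uuid.uuid4())
            started = time.time()
            result = fn(query, sources)  # the model itself is untouched
            TRACE_STORE.append({
                "trace_id": trace_id,
                "model": model_name,
                "model_version": model_version,
                "query": query,
                "sources": list(sources),
                "output": result.get("answer"),
                "latency_s": round(time.time() - started, 4),
                "human_review": None,  # slot for a later reviewer decision
            })
            # Pass the result through, adding the trace ID for the business application.
            return {**result, "trace_id": trace_id}
        return wrapper
    return decorator


@traced(model_name="demo-rag", model_version="0.1")
def answer(query: str, sources: list[str]) -> dict:
    # Placeholder for a real model or RAG call.
    return {"answer": f"Summary of {len(sources)} documents for: {query}"}


response = answer("Why was this claim flagged?", ["doc-12", "doc-40"])
print(response["trace_id"] == TRACE_STORE[0]["trace_id"])  # True
```

The design choice worth noting is that the wrapper only observes: the model call and its result are unchanged, which is what lets such a layer be added without retraining or replacing anything.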

These relationships are represented with three complementary views:

- A provenance timeline, illustrating where each input originated

- A decision-flow view, outlining how the model’s reasoning unfolds

- A trace graph, linking data, model actions, and human interventions across the decision lifecycle
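The trace graph in particular maps onto a simple data structure. The sketch below is a hypothetical, minimal version, not the demo’s implementation: nodes carry a kind (data, model, human, output), each edge records that one node fed into another, and a depth-first walk backwards from an output recovers its full provenance. `TraceGraph` and the node names are illustrative assumptions.

```python
from collections import defaultdict


class TraceGraph:
    """Hypothetical trace graph. Nodes are data sources, model steps, human
    actions or outputs; each edge records that one node fed into another."""

    def __init__(self) -> None:
        self.kind: dict[str, str] = {}
        self.parents: dict[str, set[str]] = defaultdict(set)

    def add_node(self, node_id: str, kind: str) -> None:
        self.kind[node_id] = kind

    def link(self, upstream: str, downstream: str) -> None:
        self.parents[downstream].add(upstream)

    def provenance(self, node_id: str) -> list[str]:
        """All nodes upstream of node_id, found by depth-first search."""
        found: list[str] = []
        visited: set[str] = set()
        stack = list(self.parents.get(node_id, ()))
        while stack:
            current = stack.pop()
            if current in visited:
                continue
            visited.add(current)
            found.append(current)
            stack.extend(self.parents.get(current, ()))
        return found


g = TraceGraph()
for node, kind in [("crm_snapshot", "data"), ("policy_doc", "data"),
                   ("retriever", "model"), ("generator", "model"),
                   ("analyst_review", "human"), ("final_output", "output")]:
    g.add_node(node, kind)
g.link("crm_snapshot", "retriever")
g.link("policy_doc", "retriever")
g.link("retriever", "generator")
g.link("generator", "analyst_review")
g.link("analyst_review", "final_output")

upstream = g.provenance("final_output")
print(sorted(n for n in upstream if g.kind[n] == "data"))  # ['crm_snapshot', 'policy_doc']
```

Filtering the same walk by kind gives the other views cheaply: data nodes for the provenance timeline, model nodes for the decision flow, human nodes for review history.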

The practical pillars stay the same across implementations: capture provenance, provide layered explanations for different audiences (operators, compliance, customers), and export machine-readable audit artefacts for regulators. You can optimise for performance by sampling, or by computing richer explanations only for flagged cases, but the core traces must always be available.
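The trade-off between always-on traces and selective explanations can be expressed as a small policy. The sketch below is hypothetical: `build_audit_record`, `explain` and the field names are invented, and `explain` is only a placeholder that ranks input names by raw value, standing in for a real (and more expensive) attribution method. Every decision gets a core record; explanations are added for flagged cases and for a random sample of the rest, and each record serialises to JSON for export.

```python
import json
import random


def explain(decision: dict) -> dict:
    # Placeholder: ranks input names by raw value, standing in for a real,
    # costlier explanation method such as feature attributions.
    inputs = decision["inputs"]
    return {"top_factors": sorted(inputs, key=inputs.get, reverse=True)[:2]}


def build_audit_record(decision: dict, flagged: bool, sample_rate: float,
                       rng: random.Random) -> dict:
    """Hypothetical policy: the core trace is always kept; the explanation is
    computed only for flagged cases or a random sample of the others."""
    record = {
        "decision_id": decision["id"],
        "inputs": decision["inputs"],
        "model_version": decision["model_version"],
        "output": decision["output"],
        "flagged": flagged,
    }
    if flagged or rng.random() < sample_rate:
        record["explanation"] = explain(decision)
    return record


rng = random.Random(0)  # seeded so the sampling is reproducible
decisions = [
    {"id": f"d-{i}", "inputs": {"income": 40 + i, "debt": 10, "age": 30},
     "model_version": "credit-v4", "output": "approve"}
    for i in range(5)
]
records = [build_audit_record(d, flagged=(i == 2), sample_rate=0.0, rng=rng)
           for i, d in enumerate(decisions)]
print(json.dumps(records[2], indent=2))  # machine-readable artefact for export
```

With `sample_rate=0.0` only the flagged decision is explained; raising it trades compute for broader coverage without ever dropping the core traces.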

Although it is a conceptual prototype, it runs on a live RAG foundation, and building it has helped us hone our thinking about use cases, user needs, and technical approaches. The screenshots in this section give a flavour of the software.

Making auditability part of product DNA

Auditability is not a separate project; it’s a product requirement. Start by mapping the most sensitive decision paths and agree on the minimum evidence you need for them. Build that evidence into pipelines early. Use human-centred explanations so business owners can sign off on decisions quickly. And measure the cost of not having traceability — dispute times, compliance overhead, lost customer trust — then compare that to the modest upfront effort of instrumenting traces. We’ve helped organisations turn these steps into measurable outcomes: faster regulatory response times, clearer SLA definitions for models, and reduced time to triage incidents.
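Agreeing on “the minimum evidence you need” for each sensitive decision path can be turned into something a pipeline checks automatically. The sketch below assumes traces are dicts; the path names, the required fields in `MINIMUM_EVIDENCE` and the `missing_evidence` helper are all hypothetical examples of what such an agreement might contain.

```python
# Hypothetical evidence policy: for each sensitive decision path, the minimum
# fields a trace must contain before it counts as audit-ready.
MINIMUM_EVIDENCE: dict[str, set[str]] = {
    "credit_decision": {"data_snapshot", "model_version", "explanation", "reviewer"},
    "marketing_segment": {"data_snapshot", "model_version"},
}


def missing_evidence(path: str, trace: dict) -> set[str]:
    """Return the required evidence fields that are absent or empty in a trace.

    Paths without an agreed policy require nothing.
    """
    required = MINIMUM_EVIDENCE.get(path, set())
    return {f for f in required if not trace.get(f)}


trace = {"data_snapshot": "3f9a1c", "model_version": "credit-v4", "explanation": None}
print(sorted(missing_evidence("credit_decision", trace)))  # ['explanation', 'reviewer']
```

A check like this could run at the pipeline boundary or in CI, so that a sensitive path producing incomplete traces is caught before release rather than during an audit.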

If you want to see how a conceptual xAI layer could fit into your stack, we’re happy to show you our demo and use it to help map out what auditors will need in your business context, and how to improve your specific product.

Matthew Edwards, Managing Director, UK
